An Introduction to the Kirkpatrick Model of Training Evaluation: From Reaction to Result

Everyone agrees that training is indispensable, but it is also an expense to the organization. So it is important to know whether the effort, time, and money spent on training have yielded tangible results. Without evaluating a training program, no one can know whether it was a success (or not!). That is why training evaluation is so critical for any organization.

All training has a purpose. Organizations go through the entire rigmarole of training employees so that those employees perform better in their job roles.

But not all training achieves that goal. In fact, training can go completely wrong (as we training managers see all too frequently!).

What is Training Evaluation?

Training evaluation is the process of critically examining a training program to determine whether it succeeded or failed. Data about the program is collected and analyzed, and its outcomes are measured. Evaluation is critical to the success and continuing improvement of the organization because it assesses the training program and provides feedback on how to make it better.

A training program is deemed successful if the evaluation proves that learning has indeed taken place and learners have achieved the learning objectives that formed the basis for the training.

According to Donald Kirkpatrick, Professor at the University of Wisconsin and former president of the American Society for Training and Development (ASTD, now the Association for Talent Development, or ATD), there are 3 reasons for evaluating training programs:

- To justify the cost of the training program itself

- To determine whether to continue or discontinue the program

- To learn how to improve future programs

There are several models of evaluation used to assess eLearning.


Common Methods of Evaluating Training Programs

- Kirkpatrick’s Model of Learning Evaluation: The most popular model of training evaluation

- Kaufman’s Model of Learning Evaluation: A 5-level model based on Kirkpatrick’s model

- Anderson’s Value of Learning Model: A 3-stage model that focuses on aligning training goals with the organization’s strategic goals

- Brinkerhoff’s Success Case Method: A model that identifies the most and least successful cases (participants) within a training program and studies them in depth to improve future training initiatives

We’ll be taking a brief look at Kirkpatrick’s Model of Learning Evaluation in this blog.

A Brief Introduction to Kirkpatrick’s Model of Learning Evaluation

Kirkpatrick’s Model of Learning Evaluation is one of the oldest and most widely used models of training evaluation. Donald Kirkpatrick developed it in 1959 and revised it in 1975 and 1994. It is considered the gold standard for evaluating the effectiveness of learning and has been tried and tested time and again as a reliable method of evaluating the effectiveness of any learning program.

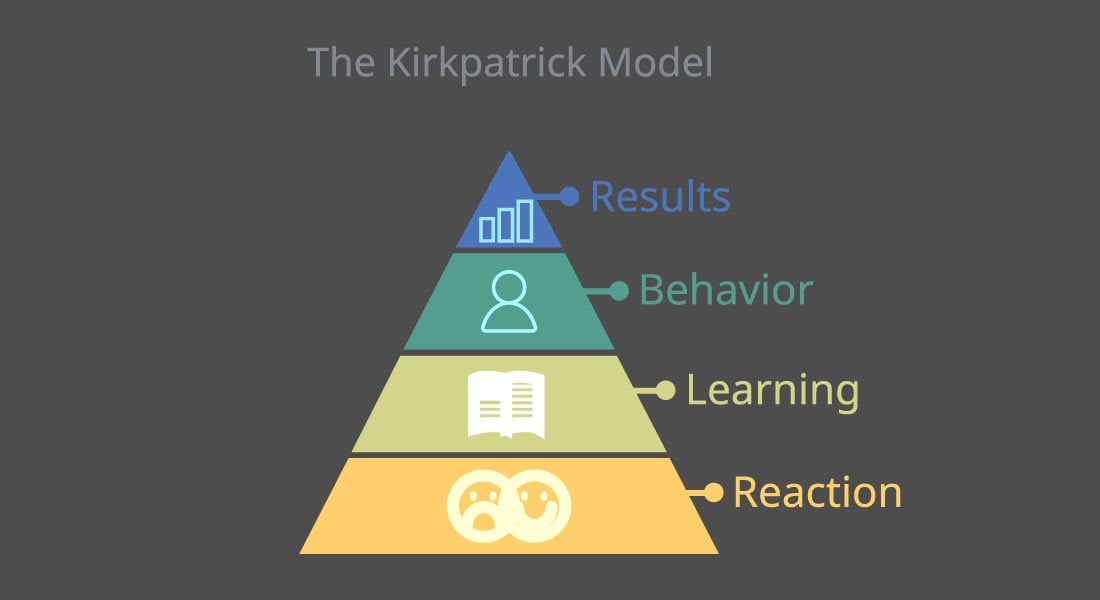

The Four Levels of Kirkpatrick’s Model of Training Evaluation

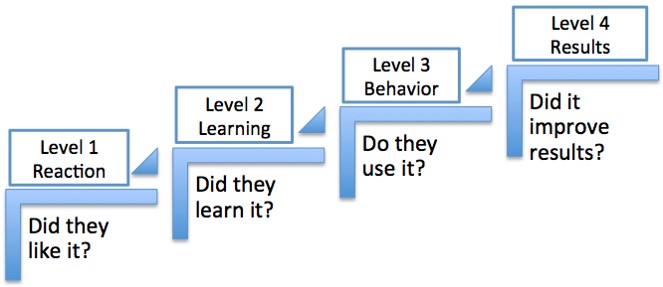

- Level 1: Reaction – How much the learners liked the program

- Level 2: Learning – How much learning took place

- Level 3: Behavior – The change in behavior with regard to job performance after the training

- Level 4: Results – The business outcome of doing the job differently after training

In 2010, Jim and Wendy Kirkpatrick clarified the 4 levels of the Kirkpatrick model with the New World Kirkpatrick Model. Measuring learning using the 4 levels of evaluation can give organizations a holistic view of their training program and of the changes (if any) that need to be made to improve it.

One advantage of Kirkpatrick’s model is that it can be used to evaluate both traditional classroom training and eLearning. As we move up the model from Level 1 to Level 4, the evaluation techniques become more complex, and the data they generate becomes more valuable.

Due to this increasing complexity as we go to higher levels (3 and 4) in the Kirkpatrick model, many organizations limit their evaluation to the first two levels, leaving the most useful data on the training off the table.

Almost all organizations measure learners’ reaction to a training program to discover how much they liked it (Level 1), but fewer than 40% go to the trouble of digging deeper to measure the impact of training on business results. Stopping at the first level can derail the entire evaluation exercise.

Let’s now look briefly into the four levels of evaluation.

Level 1: Reaction – Did the Learners Enjoy the Training?

This level checks the reaction of learners by asking questions such as:

- Did you like the program?

- Did you like the trainer/facilitator who delivered the subject?

- Did you like the venue, seating arrangements, and other aspects?

Evaluation at this level is usually done at the end of the program with feedback sheets (‘happy sheets’). It is of limited use because all it tells us is whether learners are happy and, to some extent, how they rated the trainers.

But remember this. Learners may be very happy, but the training program may prove to be a total dud when it comes to improvement in performance.
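To make this concrete, here is a minimal sketch of how happy-sheet responses are typically summarized. It assumes a hypothetical 1-5 rating scale and hypothetical question keys that mirror the three questions above; real feedback forms will differ.

```python
from statistics import mean

# Hypothetical Level 1 "happy sheet" responses on an assumed 1-5 scale
# (5 = liked it very much). Keys mirror the program/trainer/venue questions.
responses = [
    {"liked_program": 5, "liked_trainer": 4, "liked_venue": 3},
    {"liked_program": 4, "liked_trainer": 5, "liked_venue": 4},
    {"liked_program": 3, "liked_trainer": 4, "liked_venue": 2},
]

def summarize_reaction(responses):
    """Return the average rating for each question across all respondents."""
    questions = responses[0].keys()
    return {q: round(mean(r[q] for r in responses), 2) for q in questions}

print(summarize_reaction(responses))
```

Notice that every number this produces describes satisfaction only; a high average says nothing about whether anyone learned anything or will work differently.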

Level 2: Learning – Did Knowledge Transfer Happen? How Much?

This level, used extensively in eLearning, is often the most practical level of evaluation. It is usually measured with a pre-test and a post-test. In the classroom, these are typically paper-and-pencil tests; in eLearning, they are usually multiple-choice questions whose scores are tracked in the Learning Management System (LMS).
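A pre-/post-test comparison boils down to simple arithmetic. The sketch below assumes hypothetical scores (percent correct) such as you might export from an LMS report, and a hypothetical 80% pass mark standing in for "achieved the learning objectives"; neither comes from any particular LMS.

```python
from statistics import mean

# Hypothetical (pre-test, post-test) scores in percent correct.
# Learner IDs and the pass mark below are illustrative assumptions.
scores = {
    "learner_a": (40, 85),
    "learner_b": (60, 75),
    "learner_c": (80, 95),
}
PASS_MARK = 80  # assumed post-test score that counts as meeting the objectives

def learning_summary(scores, pass_mark=PASS_MARK):
    """Summarize Level 2 results: average scores, average gain, and pass rate."""
    pres = [pre for pre, _ in scores.values()]
    posts = [post for _, post in scores.values()]
    gains = [post - pre for pre, post in zip(pres, posts)]
    passed = [post >= pass_mark for post in posts]
    return {
        "avg_pre": mean(pres),
        "avg_post": mean(posts),
        "avg_gain": mean(gains),  # percentage points gained per learner, on average
        "pass_rate": sum(passed) / len(passed),
    }

print(learning_summary(scores))
```

The gain shows that knowledge improved on the test; it does not show that learners apply that knowledge on the job, which is what Level 3 asks.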

Level 3: Behavior – Did the Learners’ Behavior Change (as it relates to their job tasks) because of the Training?

Level 3 tries to evaluate the behavioral change in learners after training.

- How many times have learners applied the knowledge and skills to their jobs?

- What is the effect of the new knowledge and skills on their performance?

How do we get answers to these questions?

Allow some time to pass after the training program, then ask trainers, supervisors, and others connected to the learners’ jobs whether the training has had any impact on the learners’ performance.

A drawback of this level is that it is NOT very easy to gather accurate information on learners’ change in behavior and improvement in performance. And even if the data is collected, how valid and reliable will it be as a measure of the training’s effectiveness?

And finally, if the learners’ behavior has changed after the training, how much of that change can be attributed to the training, and how much to other factors such as motivation or changes in management style?

Level 4: Results – Did the Training Have a Measurable Impact on Business Results?

This level tries to evaluate the improvement in:

- Productivity (in terms of sales, quality, reduced costs)

- Employee retention

- Customer satisfaction

The problem is that these are the ultimate results all companies aim for, and business goals are rarely achieved through a single input such as training. That makes it very difficult to link the achievement of those goals to the effectiveness of the training alone.

How do you know, for example, that the improvement of sales is due to a particular training on selling skills alone?
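One common way to approach this question, where it is practical, is to compare the trained group with a comparable group that did not receive the training. The sketch below is a simple difference-in-differences calculation under that assumption; the sales figures and groups are hypothetical, and the result is only as trustworthy as the comparison group is comparable.

```python
from statistics import mean

# Hypothetical monthly sales per rep (in thousands) before and after the
# training period. All figures are illustrative assumptions.
trained = {"before": [50, 55, 60], "after": [62, 66, 70]}
control = {"before": [52, 54, 59], "after": [56, 57, 61]}  # did not attend

def change(group):
    """Average change in the metric from the 'before' to the 'after' period."""
    return mean(group["after"]) - mean(group["before"])

def estimated_training_effect(trained, control):
    """Difference-in-differences: the trained group's change minus the control
    group's change. Factors that moved both groups (season, market, pricing)
    cancel out; pre-existing differences between the groups do not."""
    return change(trained) - change(control)

print(estimated_training_effect(trained, control))
```

Here the trained reps improved by more than the untrained reps, and only that extra improvement is a candidate for credit to the training; motivation, territory, or a new manager could still explain part of it.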

Final Thoughts

We will look at the different aspects of Kirkpatrick’s model of evaluation and how organizations can use it to their benefit in the blogs that follow. But in the meantime, if you want to know more about training evaluation, why not download this free eBook on ROI and eLearning – Myths and Realities?