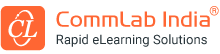

Delving a Little Deeper into the Kirkpatrick Model of Training Evaluation

Our previous blog gave a brief introduction to the Kirkpatrick Model of Evaluation and its impact on training. This blog will talk about the model in more detail. The Kirkpatrick Model of Training Evaluation describes four levels of evaluation for any training program.

Ways to Gather Data for 4 Levels of Training Evaluation

Level 1 – Reaction: Use surveys and happy sheets

Level 2 – Learning: Use pre- and post-training assessments

Level 3 – Behavior: Observation, assessments at regular intervals, performance reviews

Level 4 – Results: Have a test group and a control group to observe differences

Understanding the 4 Levels of the Kirkpatrick Model

Level 1 – Reaction (Did Learners Enjoy the Training?)

The Reaction level captures the learners’ reaction to the training session. This is the most basic level of evaluating a training program: gathering feedback from learners who have completed it on how they liked the program, the training venue, the ease of participation, the quality of the trainer, their overall experience, and the like.

In short, this level is all about finding out how satisfying, engaging, and relevant learners found the experience.

This is how most organizations evaluate their training – with bosses or managers asking their employees informally as they walk out of a classroom session, “How did it go?” This off-hand question usually gets honest answers such as, “Too long,” or “The air conditioner was not working,” or “Very exciting and informative”.

A more formal way of evaluating training at the Reaction level is to have employees fill out a questionnaire, feedback form, or survey, or even sit for an interview, after completing the training program. Learners can be given ‘happy sheets’ at the end of a classroom or virtual classroom session to rate how satisfying, relevant, and engaging they found the training to be.

However, while this data might be interesting, it is not very useful for making decisions about improving the training program. As covered in the previous blog, the reason organizations go through the entire rigmarole of training employees is to improve employee performance. So, while it might be nice to know that learners are having a great time and are enjoying the training program, it does not tell us anything about how close we are to (or how far we are from) achieving our performance goal.

Level 2 – Learning (Did Knowledge Transfer Occur?)

This is where things start to get a bit more serious. Level 2 – Learning measures the extent to which learners acquire the intended knowledge, skills, and attitudes (KSA) through their participation in the training.

As you can see, we now move from a superficial level of understanding the success of a training program to a deeper level – of understanding the extent of learning that has taken place, based on the employee’s participation in the program.

Level 2 evaluation, used extensively in eLearning, is the most appropriate and practical level of evaluation. It can be done through observation, assessments, simulations, exercises, demonstration of skills, and skill practices.

To measure what was learned during training, one must measure the learner’s knowledge and skills:

- Before the training

- After the training

Comparing the before and after results will give you a clearer picture of the improvements that occurred due to the training.
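The before-and-after comparison can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the learner names and scores are hypothetical, scores are assumed to be on a 0 to 100 scale, and the "normalized gain" (improvement divided by the room left to improve) is one common way to compare learners who started at different levels, not something prescribed by the Kirkpatrick Model itself.

```python
from statistics import mean


def learning_gain(pre, post, max_score=100):
    """Return (raw_gain, normalized_gain) for one learner.

    raw_gain is simply post - pre.
    normalized_gain divides the raw gain by the headroom the learner
    had before training (max_score - pre), so that a learner who
    started high is not penalized for having less room to improve.
    """
    raw = post - pre
    headroom = max_score - pre
    normalized = raw / headroom if headroom > 0 else 0.0
    return raw, normalized


# Hypothetical (pre, post) assessment scores, for illustration only.
scores = {
    "learner_a": (40, 70),
    "learner_b": (60, 90),
    "learner_c": (80, 85),
}

gains = {name: learning_gain(pre, post) for name, (pre, post) in scores.items()}
average_raw_gain = mean(raw for raw, _ in gains.values())

for name, (raw, normalized) in gains.items():
    print(f"{name}: raw gain {raw}, normalized gain {normalized:.2f}")
print(f"average raw gain: {average_raw_gain:.1f}")
```

Note that learner_a and learner_b both improve by 30 points, but learner_b closes a larger share of the remaining gap, which the raw gain alone would hide.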

When using multiple-choice questions in eLearning assessments, care must be taken to ensure the questions are aligned with the learning objectives and content. If they are not, the skewed data they generate may lead to unnecessary changes being made to the program.

Level 3 – Behavior (Did the Training Change Learners’ Behavior?)

This level measures how far learners are able to apply what they learned back on the job. There must be a change in behavior after a successful training program – the question now is, “How much of what was learned is implemented?”

Having spent time and resources on training their teams to perform better at the workplace, training managers await results, eagerly – after all, the proof is in the pudding.

But the results are not always seen immediately; the most valid results are those that are collected over a period of time on:

- The change in behavior – when it happens (is the job being done better, faster, or with more ease?)

- The relevance of change – as and when it happens (is this the change that was intended?)

- How sustainable this change is over time (will a change seen immediately after training last for some period or will it be forgotten?)

This data is valuable, no doubt, but it is also more difficult to collect than in the first two levels.

Direct observation, on-the-job testing, assessments at regular intervals (immediately after training, a month, six months, and a year after training), and performance reviews are commonly used to determine if behavior has changed as a result of the training.
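One rough way to see whether a change is sustained is to express each follow-up assessment as a fraction of the score recorded immediately after training. The sketch below assumes hypothetical scores and interval labels, and it covers only the assessment part of Level 3; observation and performance reviews need their own, usually qualitative, records.

```python
def retention(scores_by_interval, baseline_key="immediate"):
    """Express each follow-up score as a fraction of the baseline score.

    scores_by_interval maps an interval label to an assessment score.
    The baseline is the score taken immediately after training.
    """
    baseline = scores_by_interval[baseline_key]
    if baseline == 0:
        raise ValueError("baseline score must be non-zero")
    return {label: score / baseline for label, score in scores_by_interval.items()}


# Hypothetical on-the-job assessment scores for one learner.
follow_ups = {
    "immediate": 80,
    "1_month": 72,
    "6_months": 64,
    "12_months": 60,
}

ratios = retention(follow_ups)
for label, ratio in ratios.items():
    print(f"{label}: {ratio:.0%} of the immediate post-training score")
```

A ratio that keeps falling across intervals suggests the change is fading and may call for refresher training or on-the-job reinforcement.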

Level 4 – Results (Did the Training have a Measurable Impact on Learners’ Performance?)

Level 4 of training evaluation is considered the most important of all four levels, because it measures the impact of training on business and how it contributes to the success of the organization.

Several metrics can be used to measure the impact of training on business, depending on the type of training being evaluated. Some examples are:

- Sales figures

- Customer satisfaction ratings

- Turnover rate

Many organizations skip Level 4 evaluation because of the time and expense involved, which prevents informed decisions from being made about training design and delivery.

A great way to do level 4 evaluation is by using two groups of employees with similar characteristics – a test group that goes through the training and a control group which doesn’t.

If the group that went through training delivers better metrics than the one that didn’t, the results will be there for all to see.
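The test-versus-control comparison can be sketched as follows. The figures are hypothetical (say, monthly units sold per employee), and the use of Welch's t statistic is an assumption added here as one simple way to gauge whether the gap is larger than the spread within each group; the original model does not prescribe a particular statistical test.

```python
from math import sqrt
from statistics import mean, variance


def compare_groups(test, control):
    """Return (difference_in_means, welch_t) for two groups.

    difference_in_means is mean(test) - mean(control).
    welch_t divides that difference by its standard error, without
    assuming the two groups have equal variance. It is None when
    there is no variation at all in either group.
    """
    diff = mean(test) - mean(control)
    std_error = sqrt(variance(test) / len(test) + variance(control) / len(control))
    welch_t = diff / std_error if std_error > 0 else None
    return diff, welch_t


# Hypothetical monthly sales per employee, for illustration only.
test_group = [52, 48, 55, 50, 45]      # went through the training
control_group = [40, 42, 38, 44, 36]   # did not

diff, t_stat = compare_groups(test_group, control_group)
print(f"difference in mean sales: {diff}")
print(f"Welch t statistic: {t_stat:.2f}")
```

A large t statistic suggests the gap is unlikely to be noise, but it cannot rule out other differences between the groups, which is why the two groups need similar characteristics to begin with.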

Level 4 evaluation is the most complex, but it is also the most valuable. The data at this level provides great insight into whether training initiatives are really contributing to the business. If they are, then the value of the training is obvious; if not, then maybe you should re-examine your training initiatives as a whole.

Wrapping Up

So that was all about the four levels of the Kirkpatrick Model of Training Evaluation in some detail. Each level presents only one piece of the complete picture and each level flows into the next, so to get the complete picture, effectiveness needs to be measured at every level for every course.

We will discuss more in the third part of this Kirkpatrick series.